Optimizing advertising ROI: The ultimate guide to creative testing [+ example]

The internet is loud and busy. Every brand competes for the short attention span of potential customers online, and the competition will only intensify over time. According to Statista, ad spending will grow by 7.6% in 2024, and in the US alone, digital ad spending will reach $306.94 billion, up from $270.24 billion this year.

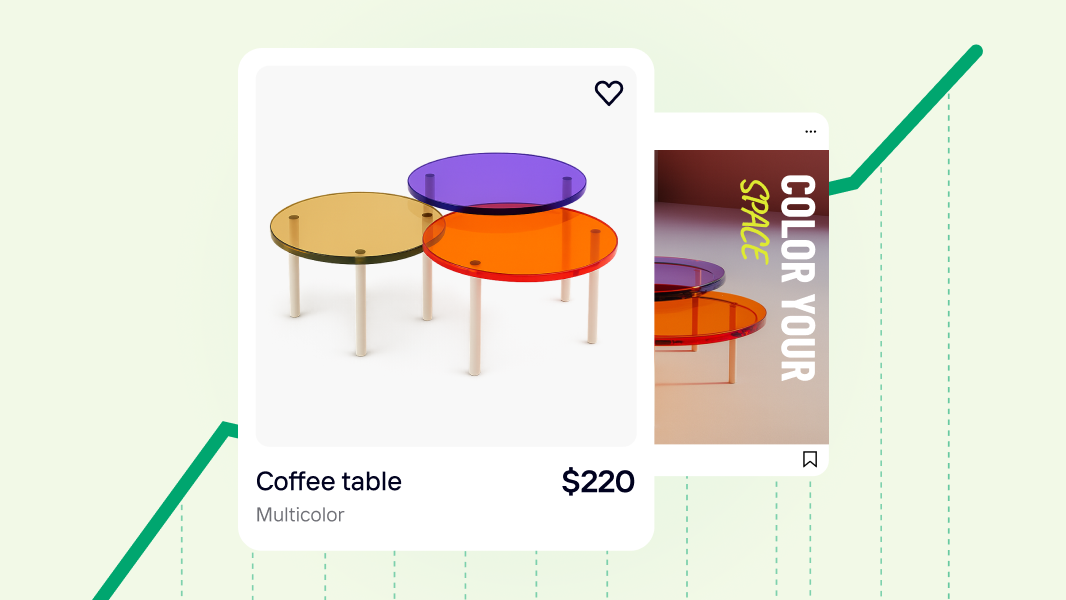

Fortunately, you don’t have to be a photographer or a design expert to create good ad photography; with Photoroom’s AI-powered photo editor, you can do it in no time. To make sure your digital ads are high quality and outperform your competitors’ ads, conduct creative testing.

Creative testing is a data-driven approach that helps predict how an ad will perform in-market. With creative testing, you can determine which of your ad photos are likely to succeed and which aren’t. That information, in turn, shows which advertising concepts are effective and deserve more of your investment.

The article explores the challenges of standing out in online advertising and underscores the significance of data-driven creative testing, offering insights into:

The benefits of utilizing Photoroom's AI-powered photo editor for creating high-quality ad photography efficiently.

Various metrics, testing methodologies, and best practices to optimize ad performance through effective creative testing.

Benefits of conducting creative testing on ad photos

Creative testing has many benefits. The key benefits of conducting creative tests on ad photos are detailed below.

1. Prevents creative fatigue

One benefit of conducting creative tests on ad photos is that they help prevent creative fatigue. Creative tests show you which ad photos receive the most engagement and conversions. Once you know which ad photos perform best, you avoid wasting time and energy continuously creating underperforming ad images or, worse, continuously trying to make underperforming ad images work.

2. Prevents declining ad photo performance

Another benefit of creative testing on ad photos is that it helps increase engagement and prevent declining ad photo performance. Creative tests provide data on which ad photos receive more audience engagement, so by using the more engaging photos from your tests, you avoid relying on ad photos whose performance is declining.

3. Helps you better resonate with your audience

Creative ad testing shows you which ad images your audience prefers. This information helps you keep producing ad photos that resonate with your audience and prompt them to engage with your ads. Ad photos that resonate with your audience and earn clicks can also improve your return on ad spend.

4. Prevents you from wasting money

Creative testing on ad photos also helps prevent you from wasting money producing ad photos that won’t work. Through creative testing, you receive data showing which ad photos your audience engages with more, so you can focus your spending on the ad photos most likely to lead to conversions.

5. Provides you with customer insight

Because creative ad testing provides you with data on which ad images your audience, or prospective customers, engage with more, such testing provides you with insight into your customers’ behavior patterns. Such insight will then help you make better decisions regarding how to craft future ad photos that lead to successful advertising campaigns.

6. Helps you identify ad photo elements that lead to conversions

Creative ad testing helps you identify not only which ad images perform better, but also which elements of those images perform better, because some forms of creative testing let you compare the performance of individual ad photo elements. For example, some creative tests let you compare whether an ad image drives more engagement and conversions with a white background than with a blue or red one.

Creative testing therefore lets you pinpoint which specific elements of your ad photos help engagement and conversions and which don’t. Once you know which elements drive results, you can incorporate only high-performing elements into your future ad photos.

7. Helps you to continue making successful ad photos in the future

One of the most impactful benefits of creative testing is that it gives you the information you need to keep creating high-converting ad photos. Creative testing on photos provides data on both which types of ad images and which specific image elements lead to better ad performance. Used properly, it can give you a repeatable recipe for ad success.

What are creative testing metrics?

To conduct a creative test on ad photos accurately, you first need to understand which metrics to track to measure ad photo engagement and conversions. Different creative tests measure different key metrics. Some of the most important creative testing metrics to keep an eye on include the following:

Impressions

Impressions are the number of times your ad appears on a user’s screen. Impressions are the foundational metric of any ad campaign. Therefore, it’s imperative that anyone conducting a creative test first understands how to track ad impressions.

Three-second video viewers ratio

This metric is the number of video views lasting at least three seconds divided by total impressions, expressed as a percentage. The higher the three-second video viewers ratio, the better the video ad captures attention in its first few seconds.
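As a minimal sketch of the calculation above, the hypothetical helper below divides three-second views by impressions and expresses the result as a percentage. The function name and the sample numbers are illustrative assumptions, not figures from a real campaign or from any ad platform’s API.

```python
def three_second_view_ratio(three_second_views: int, impressions: int) -> float:
    """Percentage of impressions in which the video was watched for at least 3 seconds."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return 100 * three_second_views / impressions


# Hypothetical numbers: 1,200 three-second views out of 10,000 impressions.
print(three_second_view_ratio(1_200, 10_000))  # 12.0
```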

Here at Photoroom, we launch hundreds of videos every week. So, although the three-second video viewers ratio is a video ad metric, it’s almost as useful to us as any metric for measuring ad photos.

Average watch time

The average watch time metric measures how long, on average, people watch or view your advertising content. You can measure it for any type of ad content, from video to photo. Longer watch times suggest that your content is more engaging.
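Average watch time is simply total viewing time divided by the number of views. The sketch below assumes you can export per-view durations in seconds; the function name and sample durations are hypothetical.

```python
from statistics import fmean


def average_watch_time(view_durations_s: list[float]) -> float:
    """Mean viewing time in seconds across all recorded views (0.0 if there are none)."""
    if not view_durations_s:
        return 0.0
    return fmean(view_durations_s)


# Hypothetical per-view durations, in seconds.
print(average_watch_time([2.0, 4.0, 9.0]))  # 5.0
```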

Link click-through rate (CTR)

Link click-through rate (CTR) is the percentage of ad impressions that result in a click on the link in your ad. A high link CTR indicates that your ad is compelling enough to prompt clicks, although clicks alone don’t guarantee conversions.
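Here is a minimal sketch of the link CTR formula, assuming you have link-click and impression counts for a single ad. The helper name and numbers are hypothetical.

```python
def link_ctr(link_clicks: int, impressions: int) -> float:
    """Link click-through rate as a percentage of impressions."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return 100 * link_clicks / impressions


# Hypothetical ad: 150 link clicks from 10,000 impressions.
print(link_ctr(150, 10_000))  # 1.5
```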

Cost-per-acquisition (CPA)

Cost-per-acquisition (CPA) is the average amount of money you spend to acquire a new lead or customer. To calculate CPA for a photo ad campaign, divide the total amount spent on the campaign by the number of new customers acquired from it. To keep your CPA profitable, make sure it stays below the average amount each new lead or customer will spend on your products or services.
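The sketch below computes CPA and checks it against the average amount a customer spends, which is the profitability rule described above. Both function names and all figures are hypothetical; if you know your margin, comparing CPA to gross profit per customer is the stricter check.

```python
def cost_per_acquisition(campaign_spend: float, new_customers: int) -> float:
    """Average spend required to acquire one new customer."""
    if new_customers <= 0:
        raise ValueError("new_customers must be positive")
    return campaign_spend / new_customers


def cpa_below_customer_value(cpa: float, avg_customer_spend: float) -> bool:
    """True if acquiring a customer costs less than that customer spends on average."""
    return cpa < avg_customer_spend


# Hypothetical campaign: $5,000 spent, 125 new customers, $60 average order.
cpa = cost_per_acquisition(5_000, 125)
print(cpa)                                  # 40.0
print(cpa_below_customer_value(cpa, 60.0))  # True
```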

Cost-per-conversion (CPC)

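Cost-per-conversion is how much money you spend to generate one conversion. To calculate it for a photo ad campaign, divide the amount spent on the campaign by the number of conversions. Be careful not to confuse it with cost-per-click, which is also commonly abbreviated CPC and is the metric reported in the Photoroom example later in this article.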

The lower the cost-per-conversion of a photo ad campaign, the more cost-efficient the campaign is, which makes it a useful gauge of how effective your advertising efforts are.
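Because a lower cost-per-conversion means a more cost-efficient campaign, comparing variants comes down to picking the minimum. This is a sketch with hypothetical names and numbers, assuming each variant’s spend and conversion count are known.

```python
def cost_per_conversion(campaign_spend: float, conversions: int) -> float:
    """Spend required to generate one conversion."""
    if conversions <= 0:
        raise ValueError("conversions must be positive")
    return campaign_spend / conversions


def lowest_cost_per_conversion(variants: dict[str, tuple[float, int]]) -> str:
    """Return the variant whose (spend, conversions) pair yields the lowest cost per conversion."""
    return min(variants, key=lambda name: cost_per_conversion(*variants[name]))


# Hypothetical variants with equal spend: A costs $15 per conversion, B costs $20.
print(lowest_cost_per_conversion({"A": (1_200, 80), "B": (1_200, 60)}))  # A
```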

Ad spend

Ad spend is the total amount of money you invest in your ad campaign. To profit from a photo ad campaign, budget accordingly and keep your ad spend below the revenue you expect that campaign to generate.

Return on ad spend (ROAS)

Return on ad spend (ROAS) is the revenue generated per dollar of advertising spend, where revenue is the total amount of money the ad campaign generates. To calculate ROAS, divide the revenue generated by an ad campaign by its ad spend.
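A minimal sketch of the ROAS formula follows; the function name and campaign figures are hypothetical. A ROAS above 1.0 means the campaign brought in more revenue than it cost, though not necessarily a profit once product costs are included.

```python
def return_on_ad_spend(revenue: float, ad_spend: float) -> float:
    """ROAS: revenue generated per dollar of ad spend."""
    if ad_spend <= 0:
        raise ValueError("ad_spend must be positive")
    return revenue / ad_spend


# Hypothetical campaign: $4,000 spent, $12,000 in attributed revenue.
print(return_on_ad_spend(12_000, 4_000))  # 3.0
```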

What types of creative tests exist?

There are different types of testing methods that you can use to see how your ad is performing in-market. The key forms of creative testing are detailed below.

A/B testing

A/B testing is probably the best-known form of creative testing. To conduct an A/B test on an ad, change one variable within the ad and compare the performance of the original and modified versions.

For example, to determine which version of an ad photo drives more engagement and conversions, and thus more profit, you could change a single element of the photo, such as its background color. Then, you could run both ad versions and compare the engagement each receives.

By comparing the performance of two ads that are identical except for the background color of the photo, you can determine which background color resonates better with your audience, provided the difference is large enough to be statistically significant.
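One common way to check whether the CTR difference between two A/B variants is statistically significant is a two-proportion z-test. The sketch below is that textbook test, not a description of how any ad platform computes significance; the click and impression counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(clicks_a: int, impressions_a: int,
                          clicks_b: int, impressions_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in CTR between variants A and B."""
    p_a = clicks_a / impressions_a
    p_b = clicks_b / impressions_b
    # Pooled click rate under the null hypothesis that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    se = sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    if se == 0:
        raise ValueError("cannot test: pooled rate is 0 or 1")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical test: white background (A) vs. blue background (B), 10,000 impressions each.
z, p = two_proportion_z_test(100, 10_000, 150, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these made-up numbers, z is a little above 3 and p is well below 0.05, so B’s higher CTR would be unlikely to be due to chance alone.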

Multivariate testing

Multivariate testing compares the performance of multiple variables within an ad simultaneously. For example, you can run a multivariate test on an ad photo by varying the background color, the shadowing, and the image size, then comparing the performance of the ad versions that combine these elements. Multivariate testing is more complex and needs more traffic, because every combination needs enough impressions to produce reliable data, but it lets you examine the combined influence of several ad photo elements.
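A full-factorial multivariate test runs every combination of the elements you vary. The sketch below enumerates those combinations with `itertools.product`; the element names and values are hypothetical examples based on the background color, shadowing, and size variables mentioned above.

```python
from itertools import product


def full_factorial_variants(factors: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return one dict per combination of factor levels (every level of every factor)."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in product(*(factors[n] for n in names))]


# Hypothetical ad photo elements to vary.
factors = {
    "background": ["white", "blue", "red"],
    "shadow": ["none", "soft"],
    "size": ["square", "portrait"],
}
variants = full_factorial_variants(factors)
print(len(variants))  # 12
print(variants[0])    # {'background': 'white', 'shadow': 'none', 'size': 'square'}
```

Three backgrounds, two shadow options, and two sizes already produce 12 ad versions, which is why multivariate tests need far more traffic than a two-version A/B test.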

Split testing

While the term split testing is often used interchangeably with the term A/B testing, technically, these two types of creative tests differ. To conduct split testing for a photo in an ad, first develop a control version of that ad’s photo and then one completely different version of the ad photo.

Next, divide your traffic into two portions: send one portion to the ad with the control photo and the other to the ad with the completely new photo. Finally, compare how each portion of your traffic responds to its ad version.
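Ad platforms usually handle the traffic split for you, but if you control assignment yourself (for example, on your own landing pages), hashing a stable user ID is one common way to split traffic so each person always sees the same version. This is a sketch; the experiment name, user IDs, and function name are hypothetical.

```python
import hashlib


def assign_arm(user_id: str, experiment: str, control_share: float = 0.5) -> str:
    """Deterministically assign a user to 'control' or 'challenger' for one experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    # Map the first 6 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return "control" if bucket < control_share else "challenger"


# Hypothetical: split 10,000 users roughly 50/50 for a photo split test.
arms = [assign_arm(f"user-{i}", "sofa-photo-split") for i in range(10_000)]
print(arms.count("control"), arms.count("challenger"))
```

Including the experiment name in the hash means the same user can land in different arms across different tests, which keeps one test’s split from carrying over into the next.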

Lift testing

Lift testing, or incrementality testing, determines the true impact of an ad by comparing the behavior of a control group that isn’t exposed to the ad campaign with that of a group that is. For ad photos, a lift test would compare outcomes for an audience shown the ad photo against a holdout audience that isn’t shown it.
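Lift is usually reported as the relative difference between the exposed group’s conversion rate and the holdout group’s. The sketch below computes that, plus the implied number of incremental conversions; the function names and group sizes are hypothetical.

```python
def incremental_lift(exposed_conversions: int, exposed_users: int,
                     holdout_conversions: int, holdout_users: int) -> float:
    """Relative lift of the exposed group's conversion rate over the holdout's."""
    exposed_rate = exposed_conversions / exposed_users
    holdout_rate = holdout_conversions / holdout_users
    if holdout_rate == 0:
        raise ValueError("holdout conversion rate is zero; lift is undefined")
    return (exposed_rate - holdout_rate) / holdout_rate


def incremental_conversions(exposed_conversions: int, exposed_users: int,
                            holdout_conversions: int, holdout_users: int) -> float:
    """Conversions in the exposed group beyond what the holdout rate would predict."""
    holdout_rate = holdout_conversions / holdout_users
    return exposed_conversions - holdout_rate * exposed_users


# Hypothetical: 3.0% conversion with the ad photo vs. 2.0% in the holdout.
print(f"{incremental_lift(300, 10_000, 200, 10_000):.0%}")  # 50%
```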

Creative testing process

There is a process to conducting creative tests. To accurately conduct creative tests on ad images, follow the steps below.

1. Do a gap analysis

The first step in creative testing ad images is to perform a gap analysis. Review all your ad campaigns and identify untapped opportunities in ad photo types, ad photo formats, the audiences you could target with your ad photos, and so on. Once you’ve identified these gaps, you can fill them and maximize the impact of your ad photos.

2. Set your goals/KPIs and hypotheses

The next step in the creative testing process is to set goals and key performance indicators (KPIs) so you know exactly what you want to achieve with your photo ad campaigns. This is also when you develop hypotheses, or educated guesses, about which ad photo elements will perform best.

3. Create multiple ad variations

Now it’s time to create your ad photo variations, each of which can test a different ad photo element. The more variations you create, the more audience responses you’re likely to gather about your audience’s preferences, although each variation also needs enough traffic to produce reliable results.

4. Choose a testing methodology

The next step in the creative testing process is to choose the type of creative test you’ll use to measure the success of your ad image campaign. Will an A/B test, multivariate test, split test, or lift test best answer your hypothesis? The decision is yours to make.

5. Set up and launch test

Next, set up and launch the creative test you chose. During this step, remember to set your testing parameters, including ad distribution, audience targeting, and how long the test will run. Once the parameters are set, you can launch your creative test and reach your target audience.

6. Analyze ad performance

Once the ads in your creative test are running, you can collect and analyze the test data. Building custom dashboards to visualize your KPIs and performance metrics makes it easier to see which ad elements are driving success and which aren’t.
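Before anything reaches a dashboard, raw delivery data usually has to be rolled up per variant. The sketch below assumes a hypothetical export format (one row per variant per day with impressions, clicks, spend, conversions, and revenue) and computes link CTR, cost-per-conversion, and ROAS for each variant.

```python
from collections import defaultdict

METRICS = ("impressions", "clicks", "spend", "conversions", "revenue")


def summarize_by_variant(rows: list[dict]) -> dict[str, dict[str, float]]:
    """Sum raw rows per variant, then derive CTR (%), cost per conversion, and ROAS."""
    totals: dict[str, dict[str, float]] = defaultdict(lambda: dict.fromkeys(METRICS, 0.0))
    for row in rows:
        for key in METRICS:
            totals[row["variant"]][key] += row[key]

    summary = {}
    for variant, t in totals.items():
        summary[variant] = {
            "ctr_pct": 100 * t["clicks"] / t["impressions"] if t["impressions"] else 0.0,
            "cost_per_conversion": t["spend"] / t["conversions"] if t["conversions"] else float("inf"),
            "roas": t["revenue"] / t["spend"] if t["spend"] else 0.0,
        }
    return summary


# Hypothetical two-day export for two variants.
rows = [
    {"variant": "A", "impressions": 5000, "clicks": 50, "spend": 100.0, "conversions": 5, "revenue": 300.0},
    {"variant": "A", "impressions": 5000, "clicks": 70, "spend": 100.0, "conversions": 5, "revenue": 300.0},
    {"variant": "B", "impressions": 5000, "clicks": 100, "spend": 100.0, "conversions": 10, "revenue": 250.0},
    {"variant": "B", "impressions": 5000, "clicks": 100, "spend": 100.0, "conversions": 10, "revenue": 250.0},
]
print(summarize_by_variant(rows)["B"])  # {'ctr_pct': 2.0, 'cost_per_conversion': 10.0, 'roas': 2.5}
```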

7. Continue to optimize

Once you’ve launched your creative test and analyzed the ad image performance from it, continue to optimize your ads and their images based on the information you learned. Doing this will help you maintain successful ad image performance. This is especially true as the needs or preferences of your audience change.

Creative testing best practices

To optimize the success of your creative tests, there are some best practices that you should adhere to. Some creative testing best practices include the following:

Have a solid hypothesis

When creative testing, it’s important to clearly understand what you want to learn from your testing results. The more specific and focused a hypothesis is, the easier it will be to pick accurate variables to test, which, in turn, will lead to more reliable data.

Set an appropriate budget

Another creative testing best practice is setting an appropriate test budget. You can estimate the budget for an A/B test by multiplying your cost per acquisition by the number of conversions you want to reach. For example, if your goal is 10,000 conversions and your CPA is $2.00, your total testing budget should be $20,000. To keep your results from being skewed by one variant receiving more exposure than another, give each component of your creative test a similar budget.

Choose A/B testing if you’re a beginner at conducting creative tests

Creative testing can be complicated, so if you’re a beginner, do yourself a favor and start with an A/B test. A/B testing suits beginners because it’s the best-known method and its results are the easiest to understand and track.

Develop a creative testing roadmap

When running more complex creative tests or several creative tests at once, you’ll benefit from developing a testing roadmap. A roadmap lays out your testing plan and shows how the components of your tests may influence one another.

Use automated testing tools

Another creative testing best practice is to use automated testing tools wherever possible. Automated tools save you time and energy because you don’t have to run every part of the test manually, and they reduce the chance of errors when measuring results.

Avoid exposing your test audience to other ads

When running a creative test on an ad image campaign, avoid exposing your test audience to your other ads and their images while the test is in progress, because those ads could alter audience behavior.

If they do, the engagement and behavior data from your creative test won’t be reliable or accurate. The best practice is to use a fresh audience for every new round of creative testing.

Don’t compare your current ad campaign to older ad campaigns

You also shouldn’t compare the ad image campaign you’re testing to older ad image campaigns, because older campaigns may have been affected by variables that don’t apply to your current one. Your best bet is to create a brand-new ad image campaign or clone an existing one, so every variant runs under the same conditions.

Don’t rush your creative test

When running a creative test, don’t rush things. The test needs time to collect enough data to produce meaningful, statistically significant results, and rushing can also lead to mistakes when documenting, collecting, or analyzing test data.
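One way to judge how long is long enough is to estimate, before launching, how many impressions each variant needs to detect the CTR change you care about. The sketch below uses a standard normal-approximation sample-size formula for comparing two proportions, one common approach among several; the baseline and target CTRs are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Impressions per variant needed to detect baseline_rate -> expected_rate (two-sided test)."""
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate))
    ) ** 2
    return ceil(numerator / (expected_rate - baseline_rate) ** 2)


# Hypothetical: detect a CTR improvement from 1.0% to 1.2%.
print(sample_size_per_variant(0.010, 0.012))
```

With these assumed rates, each variant needs a little under 43,000 impressions; dividing that by your expected daily impressions per variant gives a rough minimum test duration. Smaller expected improvements need much larger samples.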

Perform ongoing testing and keep your data

Once you’ve received the results of your first creative test, use them to guide ongoing testing. Continuously testing and optimizing your ads and their photos helps you maintain ad success and stay on top of changes you’ll need to make as audience behavior or preferences shift. Keep all the data you collect organized so you can consult it when updating ads or planning future campaigns.

Photoroom example of A/B/C creative testing

In a previous Facebook ad test, we here at Photoroom explored how polishing the backgrounds of product pictures with Photoroom affects ad performance. Today, we’re sharing a follow-up: the results of a recent A/B/C test we conducted on Facebook ads. This time, we looked at placing objects, specifically furniture, in their contextual environment.

Our new objective: identify the winning ad variant that could improve click-through rate (CTR) and reduce cost per click (CPC). For this experiment, we generated three image variants in seconds to represent our A, B, and C test groups. They looked like this:

Variant A showcased the original image with a white background

Variant B featured an image enhanced only using our Instant Shadows tool

Variant C presented a generative AI background that placed the product in a contextual setting, specifically within a stylish living room.

The ad copy, audience targeting, and landing page remained consistent across all three variants, ensuring that the only variable under scrutiny was the image itself.

Running the test

The A/B/C test spanned over two weeks, allowing us to gather substantial data and draw reliable conclusions. Throughout this period, we closely monitored each ad variant's performance metrics to determine which resonated most effectively with our target audience.

How the variants performed

The findings were both illuminating and affirming.

Ad variant C, featuring the product in a contextual living room setting, emerged as the clear winner.

It boasted the highest CTR: +37% better than A and +67% better than B

It resulted in the lowest CPC: a 34% improvement over A and a 52% improvement over B. These results were statistically significant at the 95% confidence level.

Lessons from the test

In short, the test confirmed our hypothesis and showed the importance of challenging assumptions about how audiences interact with ads and what they expect from the quality of the photography.

It confirmed our hypothesis: Potential customers prefer to see products in a contextual setting—particularly furniture. The ability to visualize how an item complements their living space proved to be a key factor in driving engagement and conversions. By allowing prospects to assess how a purchase fits into their own surroundings, we create a more compelling and personalized shopping experience.

The power of high-quality imagery can’t be overstated: The results of this A/B/C test reinforce the importance of using seamless, professional-level imagery in your ads. By presenting your products in a visually appealing and contextually relevant way, you can significantly improve engagement and conversion rates.

The value of data-driven decision-making: Conducting A/B/C tests allows you to make data-driven decisions based on real performance metrics. By testing different variants and closely monitoring the results, you can learn what your audience actually responds to and adjust your strategy accordingly.

Partner with us on your next campaign

Stay tuned for more tests where we research ad performance across different product categories. We're inviting partners from various categories like furniture, cosmetics, health, fashion, and retail to share images that we can incorporate into our next round of ad tests. In return, we'll cover the ad expenses.

You can also use the Photoroom API to edit ad images for your creative tests, for example, to change creative test variables such as background color, shadowing, and image size.

Ongoing creative tests aim to prove that the quality of the visual elements of an ad drives traffic and translates into meaningful conversions. As we continue to explore and refine our approach here at Photoroom, we look forward to sharing more insights that can empower your advertising strategies.

Ready to partner with us to optimize your ad assets? Contact us now.

Check out our Photo Editor API offerings:

![Optimizing advertising ROI: The ultimate guide to creative testing [+ example]](https://storyblok-cdn.photoroom.com/f/191576/2700x900/2811afe0c8/photoroom_delivered_a_34_improvement_for_ads_l_.webp)
